- Deep Reinforcement Learning: The Secret to Bipedal Robot’s Soccer Success

- From Walking to Dribbling: How Bipedal Robots are Revolutionizing Soccer

- Agile Bipedal Robots: The Future of Soccer Training and Coaching

- Overcoming Challenges: The Role of Deep Reinforcement Learning in Bipedal Robot’s Soccer Skills

Deep Reinforcement Learning: The Secret to Bipedal Robot’s Soccer Success

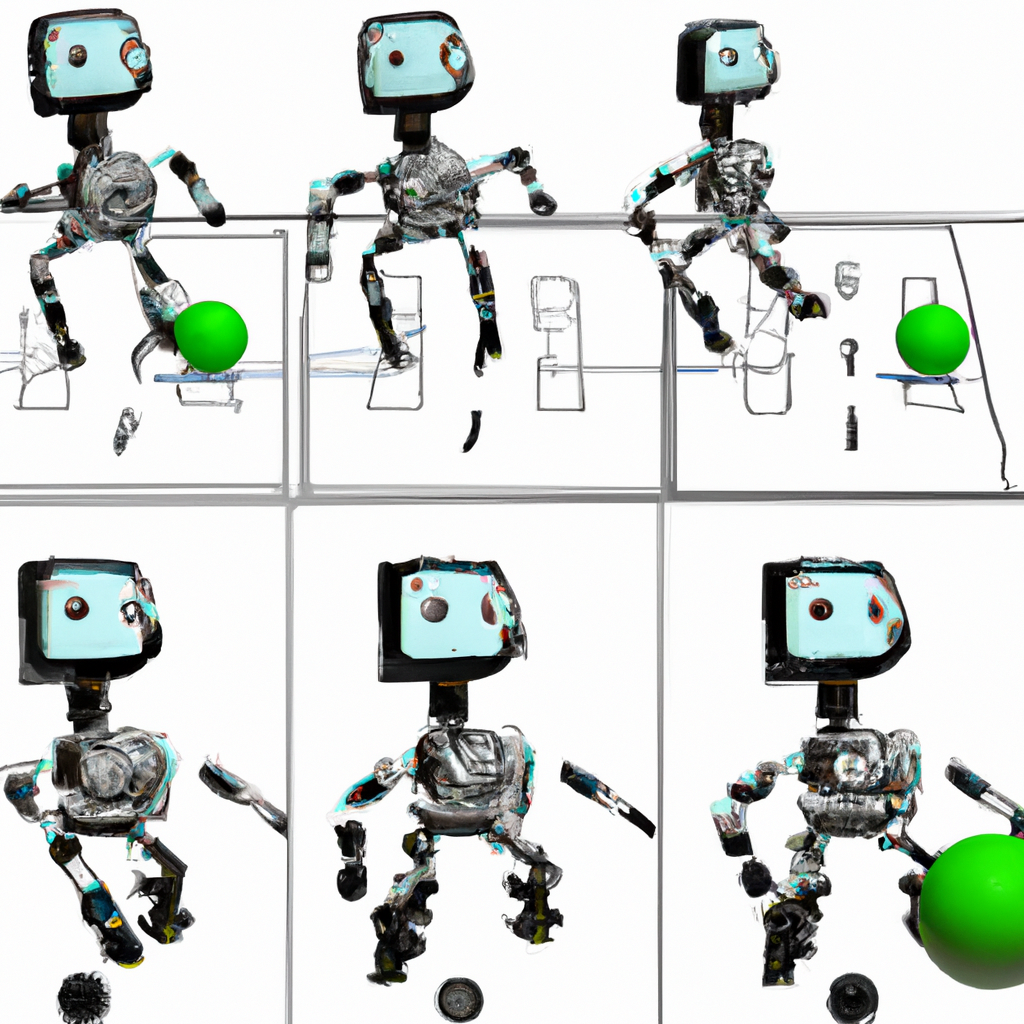

Deep Reinforcement Learning (DRL) has emerged as a groundbreaking technology in the field of robotics, particularly in the development of bipedal robots capable of mastering soccer moves. DRL is a branch of machine learning that combines reinforcement learning with deep neural networks, enabling robots to learn from their actions and experiences and make better decisions over time. Learning is driven by a reward signal: the robot receives positive or negative feedback based on its actions and gradually adjusts its behavior to maximize the reward it accumulates.
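To make the reward-driven loop concrete, here is a minimal sketch using tabular Q-learning on a toy problem. Everything in it is an illustrative assumption: the one-dimensional track, the reward values, and the function names are hypothetical, and a real DRL system would replace the lookup table with a deep neural network and the toy track with a physics simulator or a physical robot.

```python
import random


def train_q_table(n_states=6, episodes=200, alpha=0.5, gamma=0.9,
                  epsilon=0.2, seed=0):
    """Tabular Q-learning on a toy 1-D track; the ball sits in the last cell."""
    rng = random.Random(seed)
    moves = (-1, +1)  # action 0 = step left, action 1 = step right
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]

    for _ in range(episodes):
        state = 0
        for _ in range(50):  # cap the episode length
            # Epsilon-greedy: mostly exploit, sometimes explore.
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1

            next_state = min(max(state + moves[action], 0), goal)
            done = next_state == goal
            # Positive feedback for reaching the ball, small penalty per step.
            reward = 1.0 if done else -0.01

            # Move the estimate toward reward + discounted future value.
            future = 0.0 if done else gamma * max(q[next_state])
            q[state][action] += alpha * (reward + future - q[state][action])

            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    """Best known action in each state (1 means 'step right')."""
    return [0 if row[0] > row[1] else 1 for row in q]


if __name__ == "__main__":
    print(greedy_policy(train_q_table()))
```

After training, the greedy policy steps toward the ball from every non-goal cell, which is the behavior the reward was designed to encourage.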

In the context of soccer, DRL has been instrumental in teaching bipedal robots to perform complex moves and maneuvers that were once thought to be exclusive to human players. By leveraging the power of neural networks and advanced algorithms, DRL allows these robots to analyze vast amounts of data, identify patterns, and adapt their behavior accordingly. This has led to the development of robots that can not only walk and run like humans but also dribble, pass, and shoot with remarkable precision and agility.

One of the key advantages of using DRL in the development of soccer-playing bipedal robots is its ability to handle the uncertainties and complexities associated with the sport. Soccer is a dynamic game that requires players to constantly adapt to changing situations and make split-second decisions. DRL enables robots to learn from their mistakes and improve their performance over time, making them more adept at handling the unpredictable nature of the game.

Another significant benefit of DRL is its potential to revolutionize soccer training and coaching. By observing and learning from the actions of professional players, bipedal robots can develop advanced soccer skills that can be used to train and challenge human players. This can lead to more effective training sessions and ultimately, better performance on the field. Additionally, the use of DRL in the development of soccer-playing robots can provide valuable insights into the biomechanics of the sport, leading to improved injury prevention and rehabilitation strategies.

In conclusion, Deep Reinforcement Learning has proven to be a game-changer in the world of bipedal soccer-playing robots. By enabling these robots to learn from their experiences and adapt their behavior, DRL has unlocked a new level of agility and skill that was once thought to be unattainable. As the technology continues to advance, it is likely that we will see even more impressive feats from these robotic athletes, further blurring the line between man and machine on the soccer field.

From Walking to Dribbling: How Bipedal Robots are Revolutionizing Soccer

The development of bipedal robots capable of performing soccer moves has come a long way, thanks to advancements in robotics, artificial intelligence, and machine learning. The journey from walking to dribbling involves a series of complex processes, including locomotion, balance, and coordination, which are essential for a robot to successfully execute soccer moves. In this section, we will delve into the key aspects of these processes and how they contribute to the revolution of soccer-playing bipedal robots.

Locomotion is the foundation of a bipedal robot’s ability to perform soccer moves. The robot’s walking and running capabilities are achieved through a combination of kinematics, dynamics, and control algorithms. The kinematic model describes the robot’s joint positions, velocities, and accelerations, while the dynamic model captures the forces and torques acting on the robot’s limbs. Control algorithms, such as the Proportional-Integral-Derivative (PID) controller, are commonly used at the joint level to track target positions, which helps maintain stability and smooth motion during locomotion.
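As a rough illustration of the PID idea, the sketch below drives a single joint toward a target angle. The gains, the time step, and the toy joint model (the joint velocity simply follows the controller output) are assumptions chosen for readability, not values taken from any real robot.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    kp: float  # proportional gain
    ki: float  # integral gain
    kd: float  # derivative gain
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, setpoint: float, measured: float, dt: float) -> float:
        """Return the control output for one time step of length dt."""
        error = setpoint - measured
        self.integral += error * dt
        # Skip the derivative on the first call to avoid a startup spike.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_joint(target: float = 0.5, steps: int = 500, dt: float = 0.01) -> float:
    """Toy joint: the commanded value is treated as the joint velocity."""
    pid = PID(kp=4.0, ki=0.5, kd=0.05)  # hypothetical gains
    angle = 0.0
    for _ in range(steps):
        velocity_cmd = pid.update(target, angle, dt)
        angle += velocity_cmd * dt
    return angle


if __name__ == "__main__":
    print(f"final joint angle: {simulate_joint():.3f} rad")
```

On a real robot the output would usually be a torque or motor current acting on a more complex plant, and the gains would need tuning for each joint.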

Balance is another crucial aspect of a soccer-playing bipedal robot’s performance. Maintaining balance while executing soccer moves requires the robot to constantly adjust its center of mass (CoM) and generate appropriate ground reaction forces. This can be achieved through control strategies built on the Zero Moment Point (ZMP), the point on the ground where the horizontal moments produced by gravity and inertial forces cancel. Keeping the ZMP inside the support area of the feet is a standard stability criterion, and ZMP-based methods plan a CoM trajectory that satisfies it during dynamic movements.
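One common simplification, assumed here purely for illustration, is the linear inverted pendulum model: the CoM moves at a constant height, and along one horizontal axis the ZMP is the CoM position minus the CoM acceleration scaled by height over gravity. The sketch below computes that value and checks it against a foot's extent. The function names, the foot dimensions, and the one-dimensional treatment are all hypothetical.

```python
GRAVITY = 9.81  # m/s^2


def zmp_from_com(com_pos: float, com_acc: float, com_height: float,
                 g: float = GRAVITY) -> float:
    """ZMP along one horizontal axis under the linear inverted pendulum model."""
    return com_pos - (com_height / g) * com_acc


def is_balanced(zmp: float, foot_min: float, foot_max: float) -> bool:
    """The stability criterion: the ZMP must lie within the support region."""
    return foot_min <= zmp <= foot_max


if __name__ == "__main__":
    # Hypothetical support foot spanning -0.05 m to 0.15 m along the x axis.
    standing = zmp_from_com(com_pos=0.02, com_acc=0.0, com_height=0.8)
    lunging = zmp_from_com(com_pos=0.02, com_acc=1.0, com_height=0.8)
    print(standing, is_balanced(standing, -0.05, 0.15))
    print(lunging, is_balanced(lunging, -0.05, 0.15))
```

With zero acceleration the ZMP sits directly under the CoM. A forward acceleration of 1 m/s^2 shifts it backward by roughly 8 cm in this example, which pushes it behind the foot and signals that the motion is not dynamically balanced.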

Coordination is essential for a bipedal robot to perform complex soccer moves, such as dribbling, passing, and shooting. This involves the integration of multiple subsystems, including perception, planning, and control. Perception subsystems, such as cameras and sensors, provide the robot with information about its environment, while planning algorithms generate feasible motion plans based on this information. Control subsystems then execute these plans by actuating the robot’s joints and limbs in a coordinated manner.
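The perception, planning, and control stages can be pictured as a simple pipeline that runs once per control cycle. The sketch below is deliberately minimal and entirely hypothetical: the detection format, the `WorldState` type, and the speed limit are assumptions, and a real robot would turn the velocity command into joint motions rather than stop there.

```python
import math
from dataclasses import dataclass
from typing import Dict, Tuple

Point = Tuple[float, float]


@dataclass
class WorldState:
    robot_xy: Point
    ball_xy: Point


def perceive(raw_detections: Dict[str, Point]) -> WorldState:
    """Perception: turn sensor detections into a world-state estimate."""
    return WorldState(robot_xy=raw_detections["robot"],
                      ball_xy=raw_detections["ball"])


def plan(state: WorldState) -> Tuple[float, float]:
    """Planning: choose a heading and distance that lead to the ball."""
    dx = state.ball_xy[0] - state.robot_xy[0]
    dy = state.ball_xy[1] - state.robot_xy[1]
    return math.atan2(dy, dx), math.hypot(dx, dy)


def control(heading: float, distance: float,
            max_speed: float = 0.5) -> Tuple[float, float]:
    """Control: convert the plan into a bounded velocity command."""
    speed = min(max_speed, distance)
    return speed * math.cos(heading), speed * math.sin(heading)


def step(raw_detections: Dict[str, Point]) -> Tuple[float, float]:
    """One full perception -> planning -> control cycle."""
    state = perceive(raw_detections)
    heading, distance = plan(state)
    return control(heading, distance)


if __name__ == "__main__":
    print(step({"robot": (0.0, 0.0), "ball": (2.0, 0.0)}))
```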

Deep Reinforcement Learning (DRL) plays a pivotal role in the development of soccer-playing bipedal robots by enabling them to learn and adapt their behavior based on their experiences. DRL algorithms, such as the Deep Q-Network (DQN) and Proximal Policy Optimization (PPO), allow the robot to optimize its performance by exploring different actions and receiving feedback in the form of rewards or penalties. DQN is designed for discrete action sets, while policy-gradient methods such as PPO can output the continuous joint commands that legged robots typically need. This iterative learning process leads to the development of robots that can perform complex soccer moves with remarkable precision and agility.
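To give a feel for what PPO optimizes, the sketch below computes its clipped surrogate objective for a small batch. It uses plain Python lists in place of tensors, and the function name and the clip range of 0.2 are assumptions made for illustration. A full implementation would also include a value-function loss, advantage estimation, and gradient-based updates of a neural network policy.

```python
import math
from typing import Sequence


def ppo_clip_objective(new_log_probs: Sequence[float],
                       old_log_probs: Sequence[float],
                       advantages: Sequence[float],
                       clip_eps: float = 0.2) -> float:
    """Average clipped surrogate objective over a batch (to be maximized)."""
    total = 0.0
    for new_lp, old_lp, adv in zip(new_log_probs, old_log_probs, advantages):
        # Probability ratio between the updated policy and the old policy.
        ratio = math.exp(new_lp - old_lp)
        clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Taking the minimum removes the incentive to move the policy
        # far away from the one that collected the data.
        total += min(ratio * adv, clipped_ratio * adv)
    return total / len(advantages)


if __name__ == "__main__":
    # The new policy makes a good action (advantage +1) twice as likely,
    # but the objective only credits a ratio of at most 1 + clip_eps.
    print(ppo_clip_objective([math.log(2.0)], [0.0], [1.0]))
```

The clipping is what keeps each policy update small: once the ratio leaves the range around 1, pushing it further earns no additional objective.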

In summary, the revolution of soccer-playing bipedal robots can be attributed to the integration of advanced locomotion, balance, and coordination processes, as well as the application of Deep Reinforcement Learning algorithms. As these technologies continue to evolve, we can expect to see even more impressive feats from these robotic athletes, pushing the boundaries of what is possible in the world of soccer.

Agile Bipedal Robots: The Future of Soccer Training and Coaching

As bipedal robots continue to demonstrate their agility and skill in soccer, their potential to revolutionize the way we approach training and coaching becomes increasingly apparent. These robotic athletes can provide unique opportunities for players to hone their skills, while also offering valuable insights for coaches to develop more effective strategies and techniques. In this section, we will explore the various ways in which agile bipedal robots can contribute to the future of soccer training and coaching.

One of the most significant benefits of incorporating bipedal robots into soccer training is their ability to provide consistent and challenging practice sessions. Unlike human players, robots can maintain a high level of performance throughout the entire training session, ensuring that players are constantly pushed to improve their skills. Additionally, the robots’ ability to learn and adapt their behavior through Deep Reinforcement Learning allows them to mimic the playing styles of professional athletes, providing a realistic and competitive training environment for players at all levels.

Beyond their physical capabilities, bipedal robots can also serve as valuable tools for analyzing and evaluating player performance. Equipped with advanced sensors and cameras, these robots can collect real-time data on player movements, ball trajectories, and other key performance indicators. This data can then be processed and analyzed by coaches to identify areas of improvement, develop personalized training programs, and track player progress over time.

Another potential application of agile bipedal robots in soccer coaching is their ability to simulate various game scenarios and strategies. By programming the robots to execute specific plays or formations, coaches can test the effectiveness of their tactics in a controlled environment before implementing them in real matches. This can lead to more informed decision-making and ultimately, better performance on the field.

Lastly, the development of soccer-playing bipedal robots can also contribute to advancements in sports science and injury prevention. By studying the biomechanics and movement patterns of these robots, researchers can gain a better understanding of the physical demands of soccer and develop more effective injury prevention and rehabilitation strategies. This can lead to improved player health and longevity, as well as a reduction in the overall risk of injury in the sport.

In conclusion, the future of soccer training and coaching is set to be transformed by the emergence of agile bipedal robots. Their ability to provide consistent, challenging practice sessions, analyze player performance, simulate game scenarios, and contribute to sports science research makes them invaluable assets in the pursuit of soccer excellence. As these robotic athletes continue to evolve and improve, their impact on the world of soccer will only become more profound.

Overcoming Challenges: The Role of Deep Reinforcement Learning in Bipedal Robot’s Soccer Skills

While the development of soccer-playing bipedal robots has made significant strides in recent years, there are still numerous challenges that must be overcome to fully unlock their potential. These challenges include maintaining balance during dynamic movements, adapting to unpredictable game situations, and coordinating complex maneuvers. Deep Reinforcement Learning (DRL) has emerged as a powerful tool in addressing these challenges, enabling robots to learn from their experiences and optimize their performance over time.

One of the primary challenges faced by bipedal robots in soccer is maintaining balance during dynamic movements, such as dribbling, passing, and shooting. DRL algorithms can help address this issue by enabling the robot to learn optimal control strategies that ensure stability and balance during these movements. By receiving feedback in the form of rewards or penalties based on its actions, the robot can iteratively refine its control policies, leading to improved balance and overall performance.
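The rewards and penalties mentioned above have to be written down as a concrete function before training can start. The sketch below shows one possible shape for a balance-oriented reward. The terms, weights, and height thresholds are hypothetical choices made for illustration, and practical reward functions usually need careful tuning and additional task-specific terms.

```python
import math
from typing import Sequence


def balance_reward(torso_pitch: float, torso_roll: float, com_height: float,
                   joint_torques: Sequence[float],
                   nominal_height: float = 0.45,
                   fall_height: float = 0.25) -> float:
    """Per-step reward that favors an upright, steady, low-effort posture."""
    # Large penalty once the center of mass drops low enough to count as a fall.
    if com_height < fall_height:
        return -10.0

    # 1.0 when the torso is perfectly upright, smaller as it tilts (radians).
    upright = math.cos(torso_pitch) * math.cos(torso_roll)
    # Discourage crouching or stretching away from the nominal CoM height.
    height_error = abs(com_height - nominal_height)
    # Discourage large joint torques, a rough proxy for energy use.
    effort = sum(t * t for t in joint_torques)

    return 1.0 * upright - 2.0 * height_error - 0.001 * effort


if __name__ == "__main__":
    print(balance_reward(0.0, 0.0, 0.45, [0.0, 0.0]))  # upright and relaxed
    print(balance_reward(0.4, 0.0, 0.40, [5.0, 5.0]))  # tilted and straining
    print(balance_reward(1.2, 0.3, 0.20, [0.0, 0.0]))  # fallen
```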

Another challenge faced by soccer-playing bipedal robots is adapting to the unpredictable nature of the game. Soccer is a dynamic sport that requires players to constantly adjust their actions based on the changing positions of their teammates, opponents, and the ball. DRL can help robots overcome this challenge by allowing them to learn from their experiences in various game situations and develop more adaptive and flexible strategies. This can result in robots that are better equipped to handle the uncertainties and complexities of the sport.

Coordinating complex maneuvers, such as dribbling past opponents or executing intricate passing plays, is another challenge that must be addressed in the development of soccer-playing bipedal robots. DRL can play a crucial role in this aspect by enabling robots to learn effective coordination strategies through trial and error. By exploring different actions and receiving feedback based on their success, robots can gradually develop the skills necessary to perform these complex maneuvers with precision and agility.

Deep Reinforcement Learning has proven to be a powerful tool for overcoming the challenges that soccer-playing bipedal robots face. As DRL algorithms continue to advance and become more sophisticated, it is likely that we will see even greater improvements in the performance of these robotic athletes. This will not only contribute to the development of more advanced soccer training and coaching methods but also pave the way for new and exciting applications of robotics in the world of sports.
